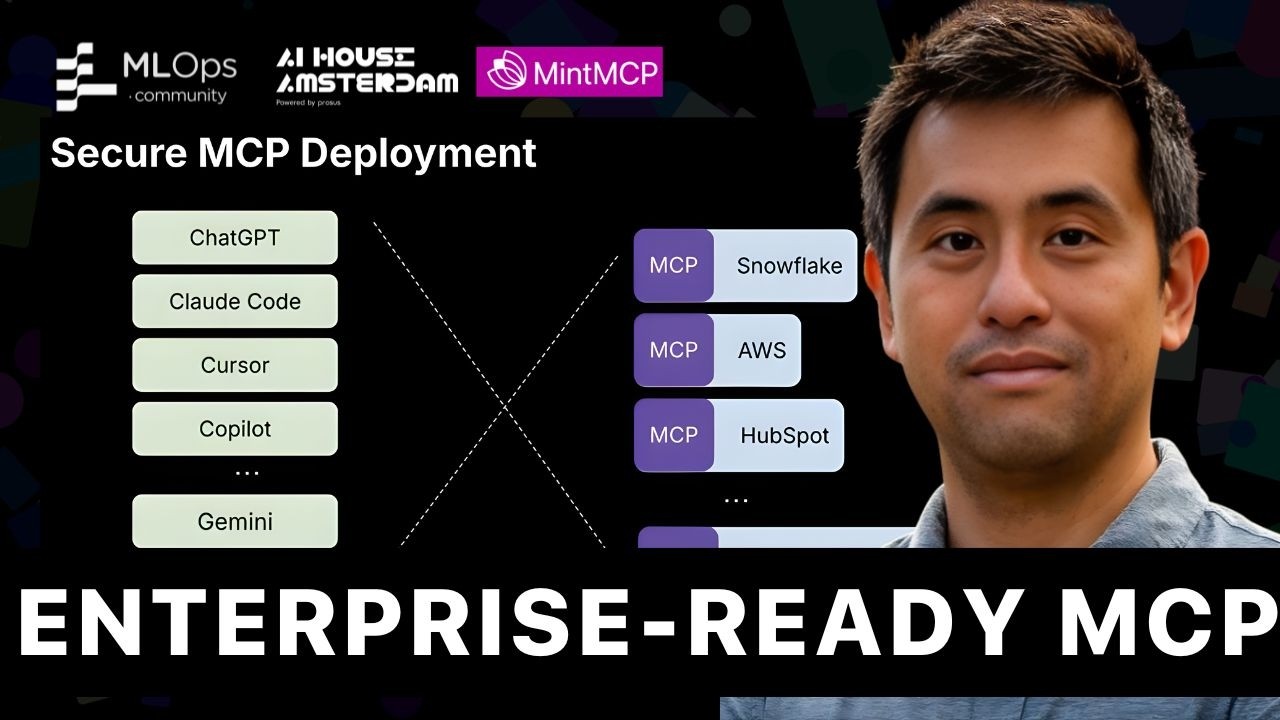

Enterprise-ready MCP // Jiquan Ngiam

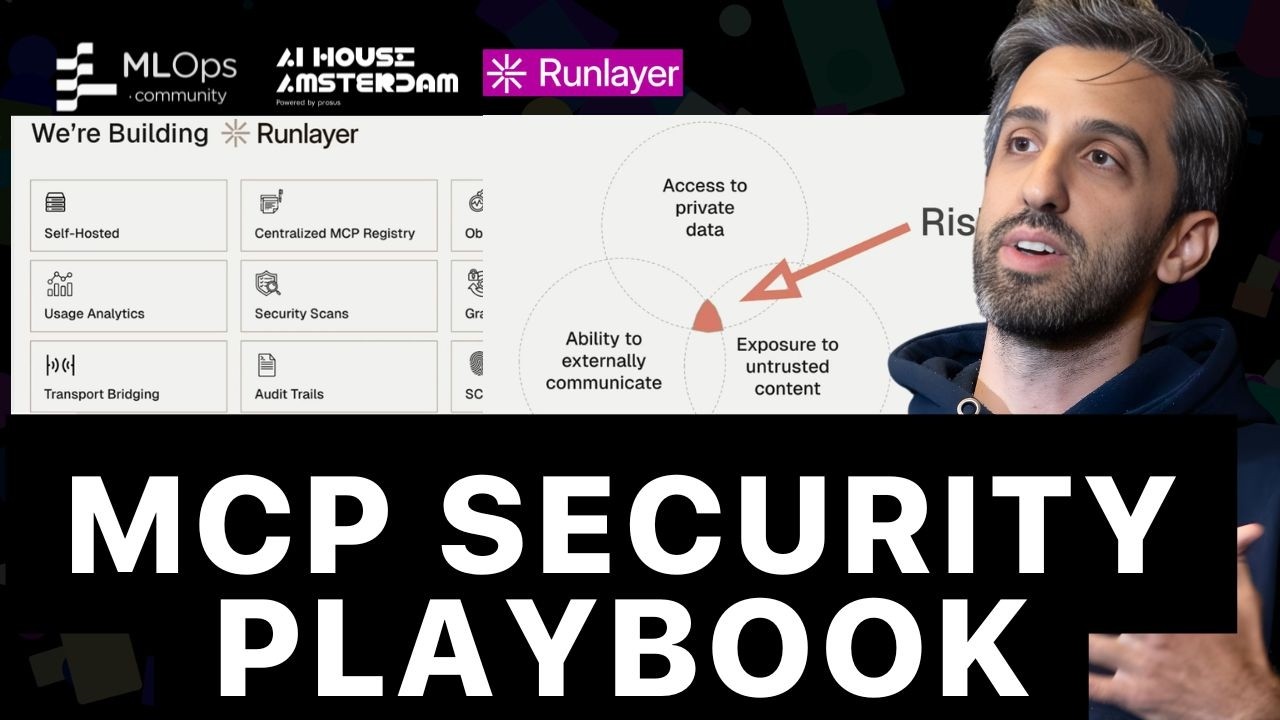

Jiquan Ngiam, CEO of MintMCP, discusses the paradigm shift from static programs to dynamic AI agents and outlines the security risks it introduces: supply chain vulnerabilities, poisoning through third-party data, and inadvertent agent behavior. He then presents a three-pronged strategy for enterprise readiness: comprehensive monitoring, preventative guardrails, and secure, role-based deployment of Model Context Protocol (MCP) servers.