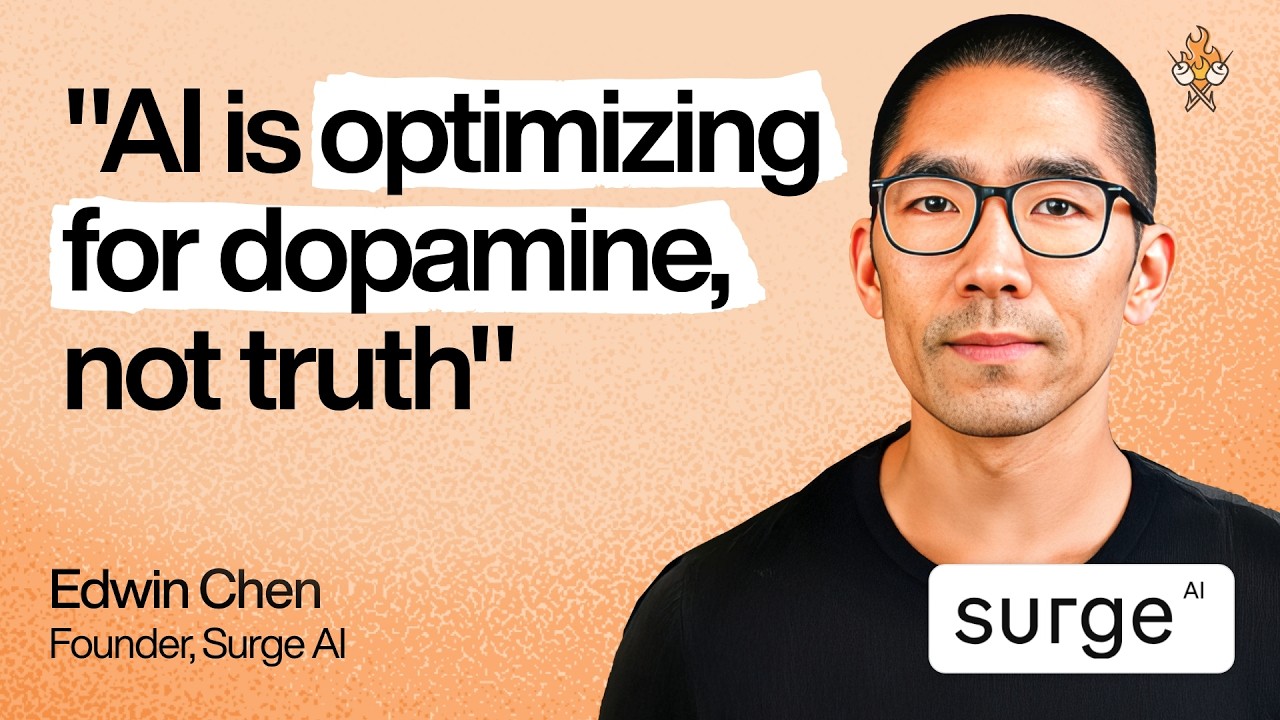

The 100-person lab that became Anthropic and Google's secret weapon | Edwin Chen (Surge AI)

Edwin Chen, founder and CEO of Surge AI, discusses his contrarian approach to building a bootstrapped, billion-dollar company; the critical role of high-quality data and 'taste' in training AI; the flaws in current benchmarks; and why reinforcement learning environments are the next frontier for creating models that truly advance humanity.