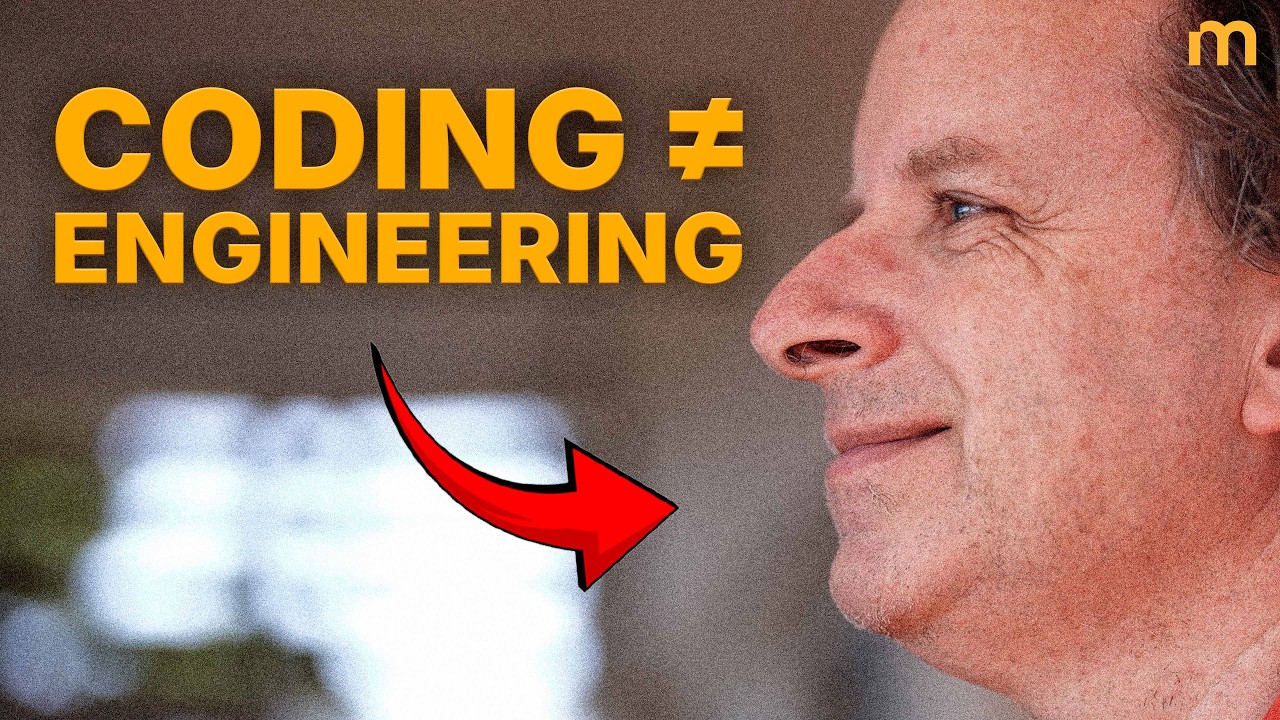

"Vibe Coding is a Slot Machine" - Jeremy Howard

fast.ai founder Jeremy Howard critiques the 'vibe coding' illusion, arguing that AI-assisted tools create a slot-machine-like experience that erodes true software engineering skills. He revisits the origins of ULMFiT, champions interactive programming for building intuition, and reframes AI risk, shifting the focus from existential threats to the dangers of power centralization and human enfeeblement.