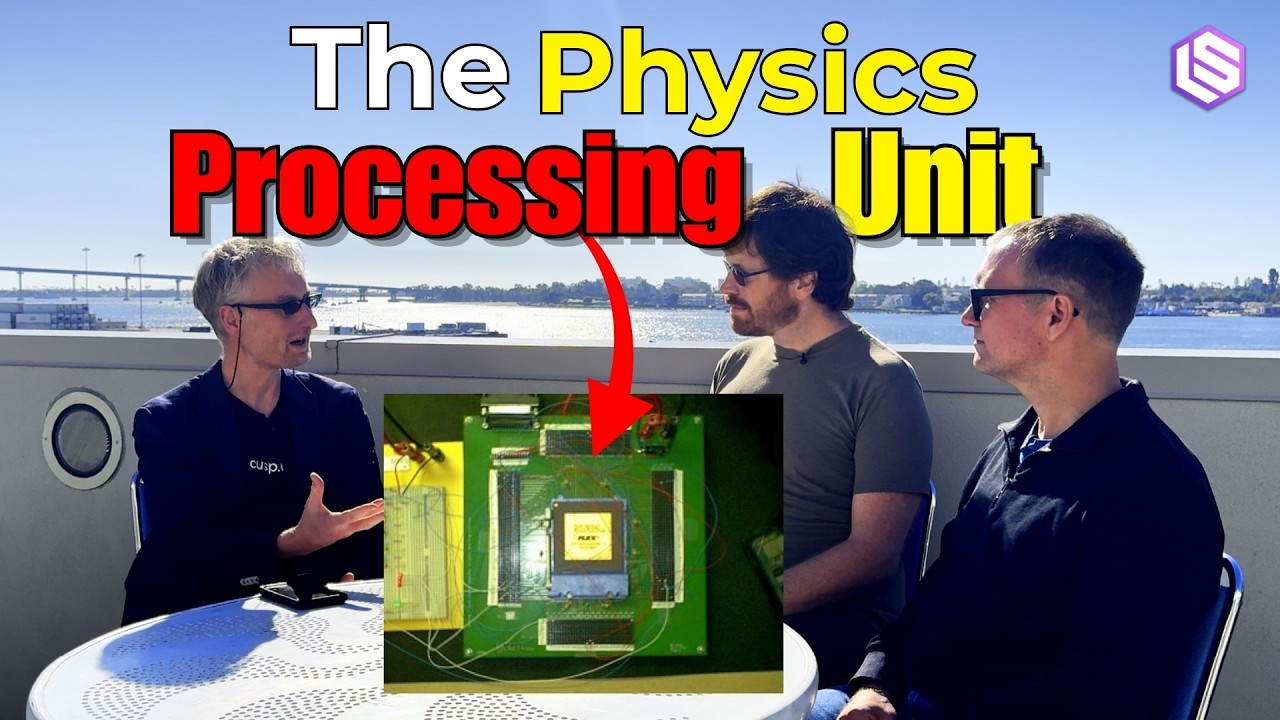

🔬 Max Welling: Materials Underlie Everything

Max Welling connects the dots between quantum gravity, equivariant neural networks, and diffusion models. He explains how ideas from theoretical physics now power a new generation of AI for materials discovery aimed at tackling climate change. He also introduces the concept of a "Physics Processing Unit" and details the architecture of his startup, CuspAI.