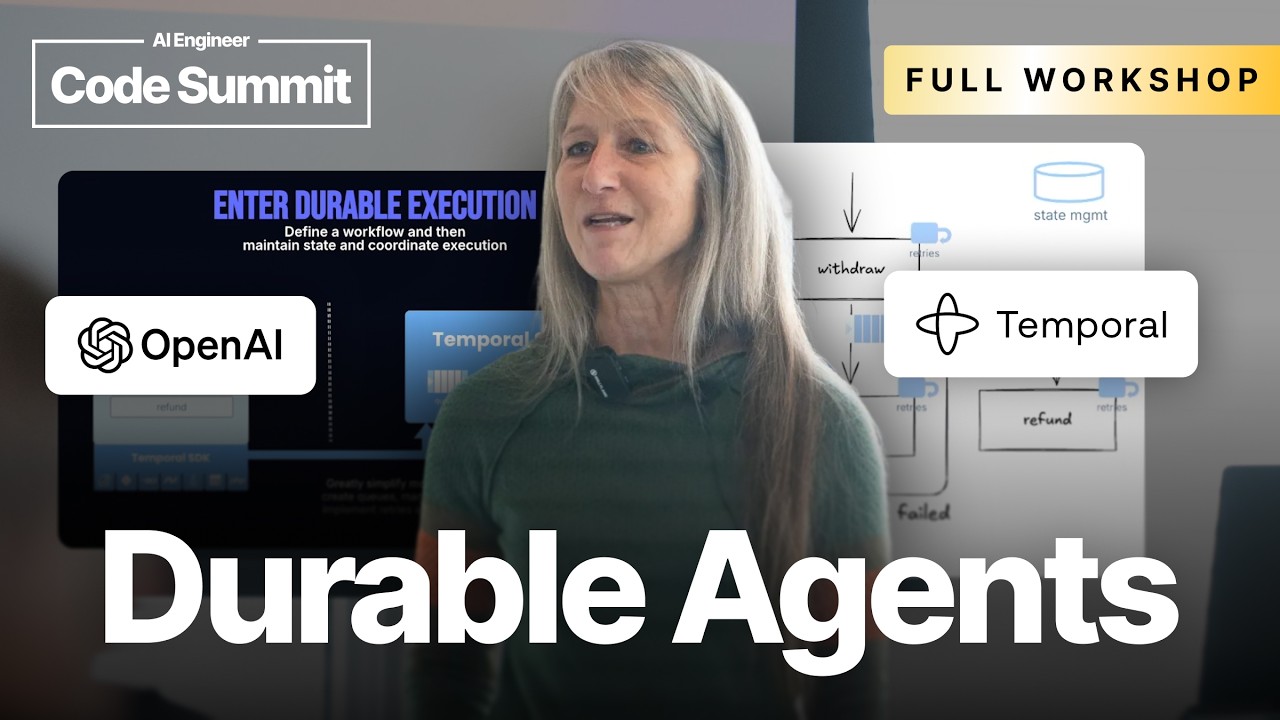

OpenAI + @Temporalio : Building Durable, Production Ready Agents - Cornelia Davis, Temporal

Explore how Temporal, a durable execution framework, brings resilience and scalability to AI agents built with the OpenAI Agents SDK. This summary covers Temporal's core concepts of Workflows and Activities, the official integration that makes agents built with the OpenAI Agents SDK durable, and patterns for orchestrating multiple micro-agents.