Intern talk: Distilling Self-Supervised-Learning-Based Speech Quality Assessment into Compact Models

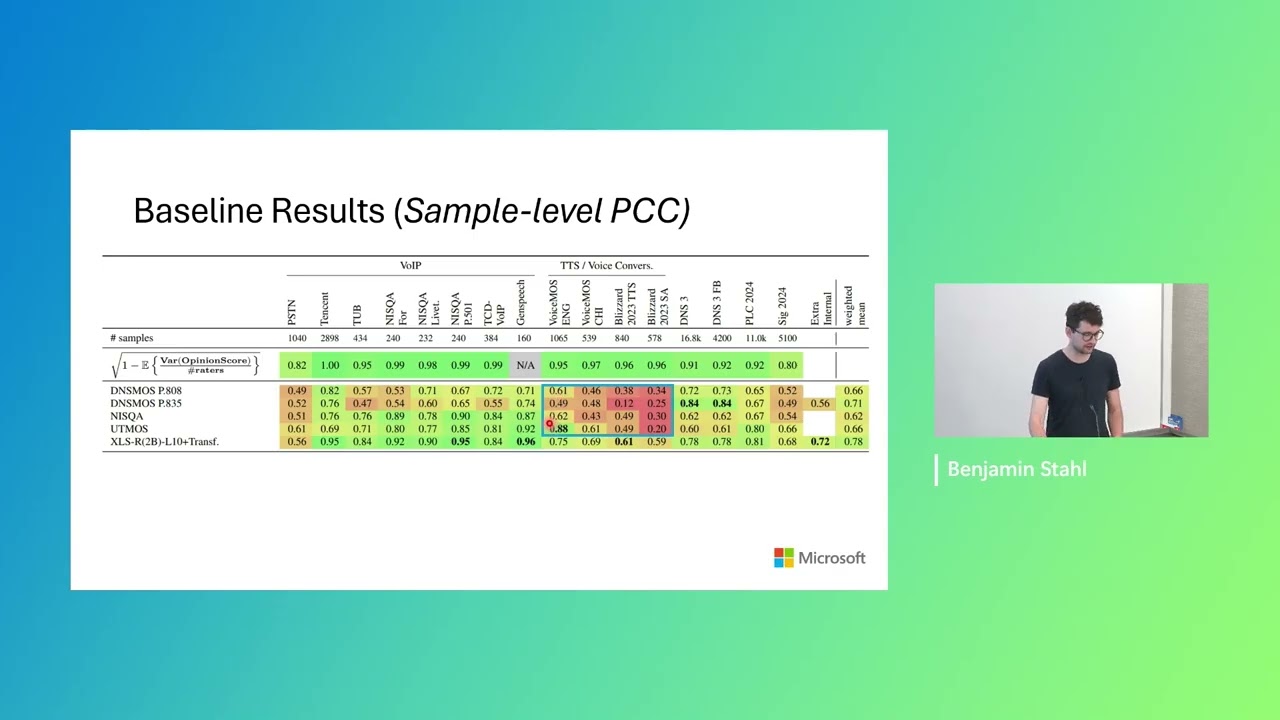

This research explores distilling and pruning large self-supervised speech quality assessment (SQA) models into compact, efficient versions. Starting from XLSR-SQA, a high-performing but large model, the work applies knowledge distillation in a teacher-student framework, training on a diverse dataset generated on the fly. The resulting compact models close more than half of the performance gap to the teacher, making them suitable for on-device and production applications where model size is a critical constraint.
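
To make the teacher-student idea concrete, here is a minimal toy sketch in plain Python. It is not the method from the talk. The "teacher" is a fixed nonlinear function standing in for a large frozen SQA model, the "student" is a small linear model, and `sample_batch` draws fresh random feature vectors at every step as a stand-in for on-the-fly data generation. All names (`teacher_score`, `student_score`, `sample_batch`, `distill`, `mean_squared_gap`) and all constants are hypothetical and chosen only for illustration.

```python
import math
import random

DIM = 3  # toy feature dimension (assumption; real inputs would be audio)


def teacher_score(features):
    """Hypothetical frozen teacher: maps features to a MOS-like score in (1, 5)."""
    fixed_weights = [0.8, -0.5, 0.3]
    s = sum(w * x for w, x in zip(fixed_weights, features))
    return 1.0 + 4.0 / (1.0 + math.exp(-s))


def student_score(params, features):
    """Compact student: a linear model with a bias term."""
    weights, bias = params
    return bias + sum(w * x for w, x in zip(weights, features))


def sample_batch(rng, batch_size):
    """Generate a fresh batch on the fly instead of reading a fixed dataset."""
    return [[rng.gauss(0.0, 1.0) for _ in range(DIM)] for _ in range(batch_size)]


def distill(steps=2000, batch_size=32, lr=0.05, seed=0):
    """Train the student to regress the teacher's scores with mini-batch SGD."""
    rng = random.Random(seed)
    weights, bias = [0.0] * DIM, 0.0
    for _ in range(steps):
        batch = sample_batch(rng, batch_size)
        grad_w, grad_b = [0.0] * DIM, 0.0
        for x in batch:
            # The teacher's output is the training target; no human labels are used.
            err = student_score((weights, bias), x) - teacher_score(x)
            for i, xi in enumerate(x):
                grad_w[i] += 2.0 * err * xi / batch_size
            grad_b += 2.0 * err / batch_size
        weights = [w - lr * g for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b
    return weights, bias


def mean_squared_gap(params, rng, n=1000):
    """Mean squared difference between student and teacher on fresh samples."""
    batch = sample_batch(rng, n)
    return sum((student_score(params, x) - teacher_score(x)) ** 2 for x in batch) / n


if __name__ == "__main__":
    untrained = ([0.0] * DIM, 0.0)
    trained = distill()
    print("gap before distillation:", mean_squared_gap(untrained, random.Random(1)))
    print("gap after distillation: ", mean_squared_gap(trained, random.Random(1)))
```

Because the student only ever sees the teacher's outputs, the training data can be generated without ground-truth quality labels, which is what makes on-the-fly generation practical in this kind of setup. A real system would replace the toy functions with neural networks and audio degradation pipelines.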