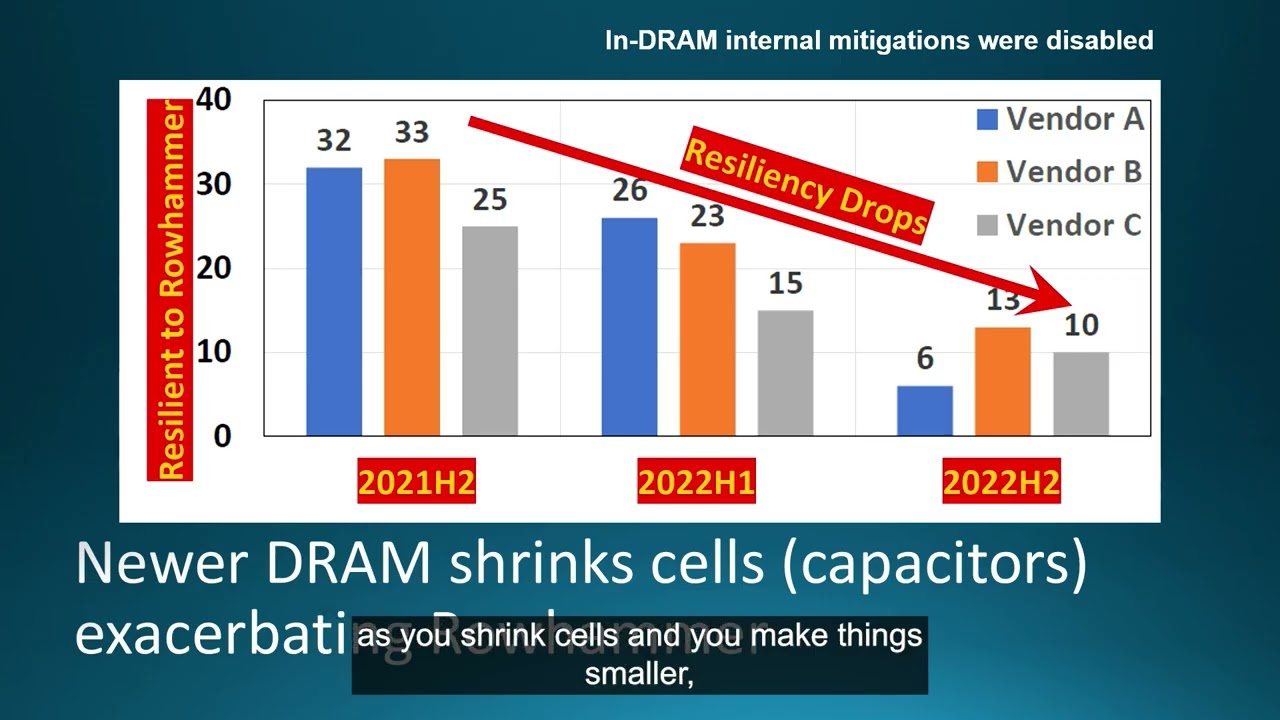

Six Years of Rowhammer: Breakthroughs and Future Directions

Stefan Saroiu of Microsoft Research recounts Project STEMA's six years of work on Rowhammer, a DRAM security flaw. He discusses how academic research kept the industry honest about DDR4 vulnerabilities, how the team developed Panopticon, an in-DRAM defense, and how that design evolved into PRAC, the industry standard for DDR5. He also stresses that significant challenges and research opportunities remain.