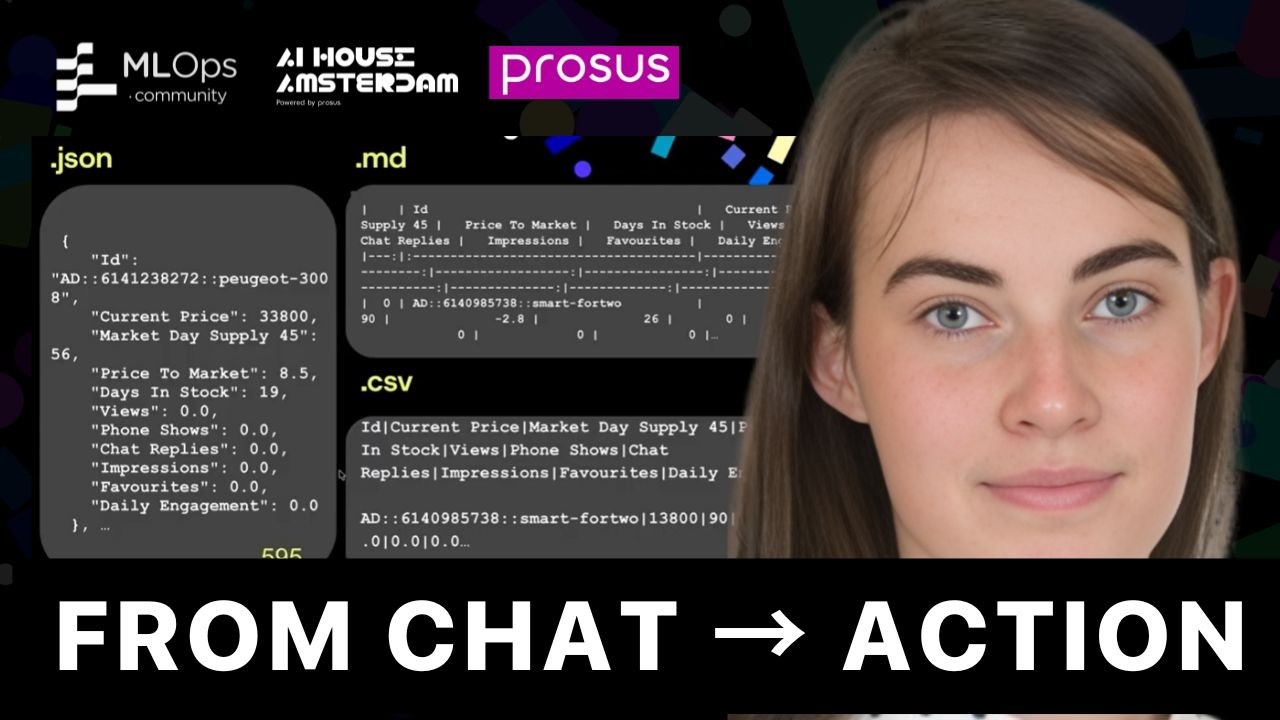

From Chat Fatigue to Instant Action // Donné Stevenson

This talk discusses how AI agent interaction is evolving beyond simple text-based chat toward intuitive, GUI-driven experiences. It covers the practical challenges and solutions in building an impactful agent for busy professionals, focusing on quick actions, efficient data streaming, and enhanced interactivity.