Evaluating Privacy Policies under Modern Privacy Laws At Scale: An LLM-Based Automated Approach

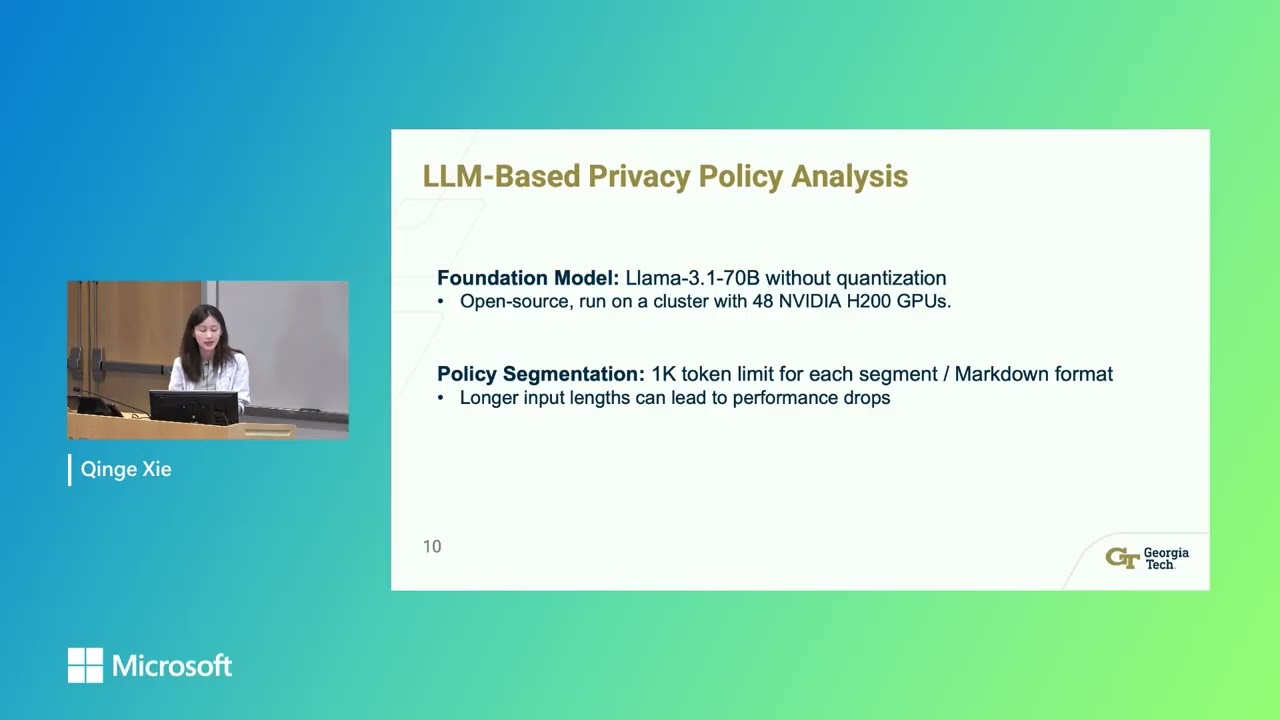

Qinge Xie from Georgia Tech presents a large-scale evaluation of modern website privacy policies using a novel LLM-based framework. The research systematizes privacy practices from 10 major US and EU regulations into 34 clauses, then analyzes over 100,000 websites to reveal current trends in data collection, data sharing, and the disclosure of consumer rights.