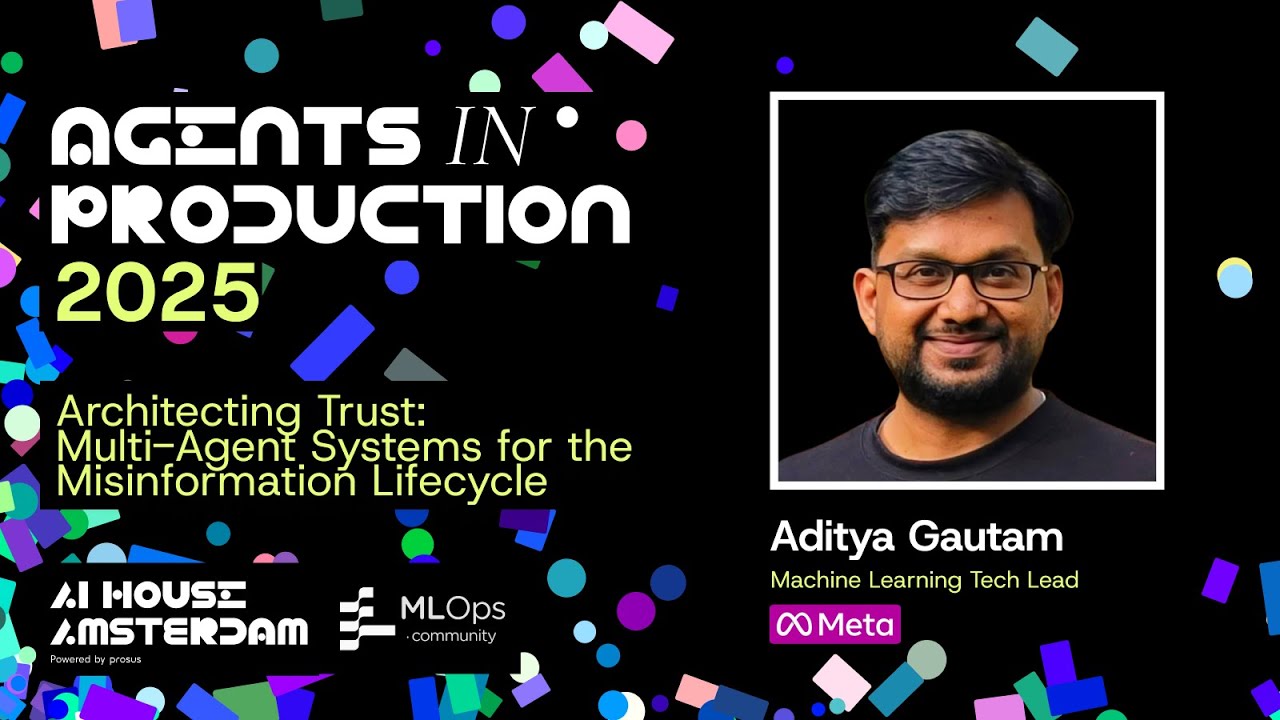

Multi-Agent Systems for the Misinformation Lifecycle

This talk gives a detailed overview of a modular, five-agent system designed to combat the entire lifecycle of digital misinformation. Based on an ICWSM research paper, it serves as a practitioner's guide to the roles of the Classifier, Indexer, Extractor, Corrector, and Verifier agents. The system emphasizes scalability, explainability, and high precision, moving beyond the limitations of single-LLM solutions. It covers the complete blueprint, from agent coordination and MLOps to holistic evaluation and optimization strategies for production environments.
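To make the modular layout concrete, here is a minimal sketch of how five independently replaceable agents might be wired together. It is not the paper's implementation: the sequential order (taken from the order in which the agents are listed above), the `Item` record, the `make_stub` helper, and the placeholder outputs are all assumptions for illustration. The abstract does not specify how the agents coordinate or what each one returns.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Item:
    """Hypothetical record passed between agents (not from the paper)."""
    text: str
    notes: Dict[str, str] = field(default_factory=dict)  # per-agent output
    trace: List[str] = field(default_factory=list)       # which agents ran, in order


# An agent is modelled as any callable that takes an Item and returns an Item.
Agent = Callable[[Item], Item]

# Assumption: agents run sequentially in the order the abstract lists them.
AGENT_ORDER = ["classifier", "indexer", "extractor", "corrector", "verifier"]


def make_stub(name: str) -> Agent:
    """Build a placeholder agent that only records that it ran."""
    def run(item: Item) -> Item:
        item.notes[name] = f"<{name} output placeholder>"
        item.trace.append(name)
        return item
    return run


def build_pipeline(agents: Dict[str, Agent]) -> Agent:
    """Compose the named agents into one callable, in AGENT_ORDER."""
    def pipeline(item: Item) -> Item:
        for name in AGENT_ORDER:
            item = agents[name](item)
        return item
    return pipeline


def run_example() -> Item:
    """Run the stub pipeline on a dummy input."""
    agents = {name: make_stub(name) for name in AGENT_ORDER}
    return build_pipeline(agents)(Item(text="example post"))


if __name__ == "__main__":
    result = run_example()
    print(result.trace)
```

Because each agent sits behind the same callable interface, a stub can be swapped for a real model-backed component without touching the others, and the `trace` list gives a simple per-item audit trail of the kind an explainability requirement would call for.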